Thirty three thousand companies will risk fines of 600,000 euros for failing to protect data. The law requires that the files are notified to the English Agency for Data Protection, although only 10% of commercial companies in Jaén (Spain) compliant. Source: Ideal.

Monday, March 31, 2008

Ripper Walmart Black Friday

Index

Topics Index

Section 1: Introduction

Section 2: linear adjustment formulas

Section 3: The least-squares parabola

Section 4: A general matrix method

Section 5: Adjustment to non-linear formulas

Section 6: The ultimate formulas rational

Section 7: The selection of the best model

Section 8: Modeling of interaction effects

Section 9 : Other modeling techniques

Topics Index

Section 1: Introduction

Section 2: linear adjustment formulas

Section 3: The least-squares parabola

Section 4: A general matrix method

Section 5: Adjustment to non-linear formulas

Section 6: The ultimate formulas rational

Section 7: The selection of the best model

Section 8: Modeling of interaction effects

Section 9 : Other modeling techniques

Sunday, March 30, 2008

Why Do I Have A Bump Near My

1: Introduction

This work is motivated by the need for accessible practical examples a series of topics that should be a compulsory part of curriculum of any individual who is pursuing an academic career in science and engineering and, unfortunately, not part of the subjects taught in many universities which if mentioned something about it maybe it happens at the end of the introductory courses in the field of statistics, and that if you have time to talk about it after consuming most of the time introducing the student to the theory of probabilities, the hypergeometric distribution the binomial distribution, normal distribution, the t-distribution, analysis of variance over what is scope to do after dealing with these issues, leaving little time to teach the student that perhaps should be the first thing you should learn why this has vast applications in various branches of human knowledge.

start with a very practical question:

If we are not pursuing a degree in Mathematics, what is the reason to spend much of our time to a matter which is essentially part of a branch of mathematics, statistics? What is the reason why we should be motivated to further increase our already heavy burden of study with something like the data set formulas?

To answer this question, we see first that the study of mathematical techniques used to "adjust" data obtained experimentally based formulas preset is indispensable to "endorse" our theoretical scientific models with what is observed in practice all days in the laboratory. Take for example the law of universal gravitation first enunciated by Sir Isaac Newton, which tells us that two bodies of masses M 1 and M 2 attract :

con una fuerza F g que va en razón directa del producto de sus masas y en razón inversa del cuadrado de la distancia d que separa sus centros , lo cual está resumido en la siguiente fórmula:

Este concepto es tan elemental y tan importante, que incluso no se requiere llegar hasta la universidad para ser introducido a él, forma parte de los cursos básicos de ciencias naturales en las escuelas secundaria y preparatoria. Sin usar aún números, comparativamente hablando las consecuencias de ésta fórmula We can summarize the following examples:

In the upper left corner we have two equal masses whose geometric centers are separated by a distance d , which are attracting with a force F . In the second row and in the same column, both masses M are twice the original value, and therefore the force of attraction between these bodies will four times greater, or 4F , since force Attraction is directly proportional to the product of the masses. And in the third row only one of the masses is increased, three times its original value, thus the attraction will be three times higher, rising to 3F. In the right column, the masses are separated at a distance 2d which is twice the original distance, and therefore the force of attraction between them falls not in the middle but a quarter of original value because the attractive force varies inversely with the distance but not the square of the distance separating the masses. And on the third line on the right column the force of attraction between masses increases the Quad to be brought closer to the masses half the original distance. And as we see in the lower right corner, if both masses are increased twice and if the distance between their geometric centers is also increased to twice the force of attraction between them will not change.

far we have been speaking in purely qualitative terms. If we talk in terms quantitative, using numbers , then there is something we need to use the formula given by Newton. We must determine the value of G, the universal gravitational constant . While we can not do that, we will not go very far to use this formula to predict movements of the planets around the sun or the movement of the Moon around the Earth. And this constant G is not something that can be determined theoretically, like it or not we have to go to the lab to perform some kind of experiment with which we can get the value of G , which turns out to be:

experiments in which although it is tempting to immediately get a formula of "best fit" to a series of data, such a formula will do little to reach a conclusion or really important discovery that can be removed with a little cunning in the study of the accumulated data. An example is the following problem (problem 31) taken from chapter 27 (The Electrical Field) of the book "Physics for Engineering and Science Students" by David Halliday and Robert Resnick

PROBLEM: One of his first experiments (1911) Millikan found that, among other charges, appeared at different times as follows, measured at a particular drop:

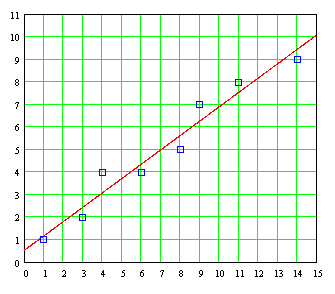

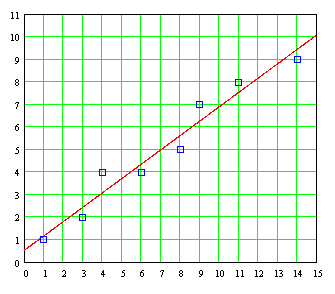

data in increasing order of magnitude, we can make a graph of them who happens to be the next (this chart and other productions in this work can be seen more clearly or even in some cases expanded with the simple expedient of enlarge):

is important to note that this chart is not an independent variable (whose value would be placed on the horizontal axis) and a dependent variable (whose value would be placed on the vertical axis) as the horizontal axis simply been assigned a different ordinal number to each of the experimental values \u200b\u200blisted, so the first item (1) has a value of 6,563 • 10 -19 , the second data (2) has a value of 8,204 • 10 -19 , and so on.

We can, if we want to obtain a straight line of best fit for these hand-drawn data. But this entirely misses the perspective of the experiment. A chart much more useful than the dot plot shown above is the following graph of the data known as graphical ladder or step graphical :

carefully inspecting the graph of this data, We realize that there are "steps" which seems to be the same height of a datum to the next. The difference between observations 1 and 2, for example, seems to be the same as the difference between observations 6 and 7. And those "gaps" where it is not, seems to be height twice the height other steps. If the height of a step to the next did not have this similarity with any of the remaining observations, we may conclude that the differences are completely random. But this is not what is happening and the steps seem to have heights equal to or double equal. These data are revealing something important, that electric charge is quantized , the electric charge reported here does not vary much from 0.7, 1.4 or 2.5, but integral multiples of one or two goals. The data we are confirming the existence of the electron , the smallest electric charge is no longer possible subdivided by physical or chemical means at our disposal. Among the data on which the "jump" from one step to another is double from that in other steps, we can conclude that data are "missing" and that an additional amount of experiments, it should be possible to find experimental values \u200b\u200bbetween those jumps "doubles" that posts in the graph, we must produce a staircase with steps of similar height could be called "basic." By way of example, the reported value of 11.50 • 10 -19 coulombs and 8,204 • 10 -19 coulombs must have an intermediate value of about 9,582 • 10 -19 coulombs with an additional recabación laboratory data should be possible to detect sooner or later.

We can estimate the magnitude of the electric charge as we now know as the electron by first obtaining the differences between the data representing a unit jump by averaging them, and after that the differences between the data representing a jump "double" also getting the same average and dividing the result of the latter two, summing and averaging the two sets of values \u200b\u200band for a final result:

Set 1 (Jump Unit):

And the average of the second data set is:

that it be divided into two:

Since there are so many data ( 4 data) in the first set and in the second set, we can give the same "simple weight" to each of the averages obtained by adding the average first to second average and dividing the result by two (of not being so, both teams have had a different number of observations, we have to give a "significant factor" arithmetic each set to give each contribution according to their relative importance):

As a postscript to this problem, which is added later experiments conducted with greater precision and minimizing sources of error with a seeking of a large number of data (which helps to gradually reduce the random error due to causes beyond the control of the experimenter) leads to a more accurate value of 1.60 • 10 -19 coulombs for the electron charge, which is the accepted value today.

This work is motivated by the need for accessible practical examples a series of topics that should be a compulsory part of curriculum of any individual who is pursuing an academic career in science and engineering and, unfortunately, not part of the subjects taught in many universities which if mentioned something about it maybe it happens at the end of the introductory courses in the field of statistics, and that if you have time to talk about it after consuming most of the time introducing the student to the theory of probabilities, the hypergeometric distribution the binomial distribution, normal distribution, the t-distribution, analysis of variance over what is scope to do after dealing with these issues, leaving little time to teach the student that perhaps should be the first thing you should learn why this has vast applications in various branches of human knowledge.

start with a very practical question:

If we are not pursuing a degree in Mathematics, what is the reason to spend much of our time to a matter which is essentially part of a branch of mathematics, statistics? What is the reason why we should be motivated to further increase our already heavy burden of study with something like the data set formulas?

To answer this question, we see first that the study of mathematical techniques used to "adjust" data obtained experimentally based formulas preset is indispensable to "endorse" our theoretical scientific models with what is observed in practice all days in the laboratory. Take for example the law of universal gravitation first enunciated by Sir Isaac Newton, which tells us that two bodies of masses M 1 and M 2 attract :

con una fuerza F g que va en razón directa del producto de sus masas y en razón inversa del cuadrado de la distancia d que separa sus centros , lo cual está resumido en la siguiente fórmula:

Este concepto es tan elemental y tan importante, que incluso no se requiere llegar hasta la universidad para ser introducido a él, forma parte de los cursos básicos de ciencias naturales en las escuelas secundaria y preparatoria. Sin usar aún números, comparativamente hablando las consecuencias de ésta fórmula We can summarize the following examples:

In the upper left corner we have two equal masses whose geometric centers are separated by a distance d , which are attracting with a force F . In the second row and in the same column, both masses M are twice the original value, and therefore the force of attraction between these bodies will four times greater, or 4F , since force Attraction is directly proportional to the product of the masses. And in the third row only one of the masses is increased, three times its original value, thus the attraction will be three times higher, rising to 3F. In the right column, the masses are separated at a distance 2d which is twice the original distance, and therefore the force of attraction between them falls not in the middle but a quarter of original value because the attractive force varies inversely with the distance but not the square of the distance separating the masses. And on the third line on the right column the force of attraction between masses increases the Quad to be brought closer to the masses half the original distance. And as we see in the lower right corner, if both masses are increased twice and if the distance between their geometric centers is also increased to twice the force of attraction between them will not change.

far we have been speaking in purely qualitative terms. If we talk in terms quantitative, using numbers , then there is something we need to use the formula given by Newton. We must determine the value of G, the universal gravitational constant . While we can not do that, we will not go very far to use this formula to predict movements of the planets around the sun or the movement of the Moon around the Earth. And this constant G is not something that can be determined theoretically, like it or not we have to go to the lab to perform some kind of experiment with which we can get the value of G , which turns out to be:

But the determination of this constant mark just the beginning of our work. We assume that this formula was given under certain laboratory conditions in which there were two masses separated by a distance known as accurately as possible. The exponent 10 -11 that appears in the numerical value the constant G tells us in a way that the effect to be measured is extremely small, which is expected, because two smaller masses the size of a marble used for the experiment will be attracted to a very weak force barely detectable almost . The existence of a force of attraction between two small masses can be confirmed in an experiment of medium complexity, this does not present major challenges. But the measure G constant and not only to find that two bodies attract represents serious difficulties. The first to evaluate the constant G was Cavendish laboratory, who used a device that was essentially a torsion balance by implementing the next schmo:

Although at first glance one thinks of the possibility of increasing all bodies used in the experiment to make the effect more intense attraction between them, no such thing can lead to out moving masses (red) without bursting the thin thread which hangs these weights. You can, however, increase the mass of blue, and this was precisely what he did Cavendish. Note that there are only two masses being attracted but two pairs allying mass, which increases the effect on the torsion balance. Either way, the enormous difficulties in obtaining a numerical value G reliable under this experiment will not increase our confidence in the value of G thus obtained.

However, a value of G obtained under these conditions and put in the formula does not guarantee that the formula will work as predicted by Newton for other conditions that are very different from the conditions used in the laboratory, which involved masses much greater distances. This formula is not the only one who can give us an attractive force between two masses decreases with increasing distance between the geometric centers of the masses. We can formulate a law that says: "Two bodies attract because direct product of their masses and inversely as the distance that separates them. "Note the absence of the term" square of the distance separating them. And we can make both formulas coincide numerically for some distance apart, but the variation in the strength of attraction given by the two formulas, it will become more and more evident as the masses are being brought closer or separated more and more. As both formulas, mathematically different, are not equally valid to describe the same phenomenon, one has to be discarded with the help of experimentally obtained data . Again we have to go to the lab. The confirmation of Newton's law is valid we get if we can measure the force of attraction for various distances by a graph of the results. For a variation inversely proportional to the square of the distance, the graph should be as follows:

one way or another, are laboratory experiments that help us to confirm or rule out any theory like this. And in experiments difficult by their very nature, which introduces a statistical random error that we can introduce experimental variation in each reading we take, we are almost obliged to collect the maximum amount of data to increase the reliability of the results, in which case the problem will be trying to draw any conclusion from the mass of data collected, because you can not expect all or perhaps none of the data will "fall" smoothly and accurately on a continuous curve. This forces us to try to find somehow the mathematical expression for a smooth, continuous curve, among many other possible ones, that best fits the experimental data.

case we have spoken this is the typical case in that before carrying out measurements in the laboratory and there is a theoretical model -a formula - waiting to be confirmed experimentally by measurements or observations made after the formula was obtained. But many other cases in which although experimental data, despite the unavoidable sources of variation and measurement errors seem to follow any law that can be adjusted to a theoretical model, such a formula does not exist, either because they have not found or perhaps because it is too complex to be stated in a few lines. In such cases the best we can do is carry out the fit of the data obtained experimentally to a empirical formula, a formula selected from many to be the one that best fits the data. The most notorious example of this today has to do with the alleged global warming on Earth, confirmed independently by several experimental data collected over several decades in many places around the Earth. Still no exact formula or even an empirical formula that allows us to predict the Earth's temperatures will be in later years if things continue as usual. All we have are graphs in which, broadly speaking, one can see a tendency towards a gradual increase in temperature, inferred from the data trend ( trend data), and even some of these data are cause controversial, such as temperature data registered in Punta Arenas, Chile, between 1888 and 2001:

Often, when carrying out the plotting of the data (the most important step prior to the selection of the mathematical model which will try to "adjust" the data) since before attempting to carry out the adjustment data to a formula we can detect the presence of an abnormality in the same due to an unexpected source of error that has nothing to do with the error of a statistical nature, as shown in the following graph of the temperatures of lakes in Detroit:

Note

carefully on this chart that there are two points that were not linked with lines for researchers to highlight the presence of a serious anomaly in the data. These are the points representing the end of 1999 and early 2000. As we begin 2000, the data show a "jump" disproportionate to the data prior history. Although we try to force all data are grouped under a certain trend predicted by an empirical formula, an anomaly as seen in this graph we practically cries out for explanation before being buried in such empirical formula. A review of the data revealed that, indeed, the "jump" disproportionate had to do with a phenomenon that was already expected at that time was going to happen with some computer systems are not prepared for the consequences of the change of digits in the date 1999 to 2000, then dubbed as the Y2K phenomenon (an acronym for the phrase " Y ear 2000" where k symbolizes thousand). The discovery of this effect gave rise to an exchange of explanations documented in places like the following:

http://www.climateaudit.org/?p=1854

http://www.climateaudit.org/?p=1868

This exchange for clarification led to the same U.S. space agency NASA to correct their own data, taking into account the effect Y2k, and corrected data displayed on the following site:

http://data.giss.nasa.gov/gistemp/graphs/

Fig.D.txt

The examples we have seen have been instances in which the experimental data despite variations in the same setting allows them to approximate a mathematical formula or even allow the detection of some error in gathering them. But there are many other occasions in which to carry out the data to plot the presence of a trend is not at all obvious, as shown in the following sample data collected on the frequency of sunspots (which may have some effect on global warming of the Earth):

In the graph of the data has been superimposed a red line under a statistical mathematical criterion represents the line of best fit to the data. But in this case this line is clearly an obvious decline, including online almost seems to be a horizontal line. If you delete line, the data seem so scattered that have chosen a straight line to try to "bundle" the trend of data seems rather an act of faith than scientific objectivity. It may be no reason to expect a statistically significant change on the frequency of sunspots over the course of several centuries or even several thousand years, given the enormous complexity of the nuclear processes that keep the Sun in constant activity. This last example demonstrates the enormous difficulties that face any researcher trying to analyze a set of experimental data on which there is any theoretical model.

The data set formulas is of vital importance always bear in mind the law of cause and effect . In the case of the law of universal gravitation, as set forth by an exact formula, assuming the masses of two bodies as unchanged, then a variation of the distance between the masses will have a direct effect on the strength of gravitational attraction is between them. A series of experimental data on a chart positions will confirm this. And even in cases where there is an exact model, we can (or rather, we need to) make a cause-effect relationship for a model of two variables can have some meaning. Such is the case when performing measurements of stature between students of different grades of elementary school. In this case, the average heights for each grade students will be different, it will increase as increasing the grade, for the simple fact that students at this age are growing in stature every year. In short, the higher the grade, the higher the average height of students who expect to find in a group. This is a cause-effect relationship. In contrast, if we find a direct relationship between the temperature of a city for one day of the year and the number of pets that people have in their homes, most likely will not find any relationship and come out empty handed because there is no reason to expect that the average number of pets per household (case) may have some influence on the temperature (effect), and if so, this effect would be negligible by mathematically smallness.

we have seen cases involve situations based on natural phenomena which we can carry out measurements in the laboratory or outside the laboratory using something as simple as a thermometer or as an amateur telescope. But there are many other cases in which it is not necessary to conduct measurements, because more than getting data in the lab what is needed is a mathematical model that allows us to make a projection or prediction with data already on hand, as data from a census or a survey. An example is the expected growth of Mexico's annual population. The national population census is to be in charge of obtaining figures on the population of Mexico, so that to try to make a prediction on the expected population growth in future years all we have to do is go to National Institute of Statistics, Geography and Informatics (INEGI) for the results of previous censuses. It is assumed that in these census figures are not exact, there is no reason to expect such a thing, given the enormous number of variables that have to deal with workers who must carry out the census and the changing day to day can affect the "reality" of the census. Even assuming that the census could be carried out exactly, we would be another problem. If we plot the population ratios of 5 years (for example), there would be no problem in making future predictions based on past data if the data when plotted fall all in a "straight line". The problem is that, when plotted almost never fall on a straight line, usually grouped around what appears to be a curve . Here we try to "adjust" the data to a Various formulas and use the one that best approximates all the data you already have, for which we need a mathematical-statistical approach that is less subjective as possible. It is precisely for this so we require the principles to be discussed here.

The data adjustment formulas, there are cases in which it is not necessary to go into detailed mathematical calculations for the simple reason that for such cases have been obtained and formulas that only require the calculation of simple things such as the arithmetic mean (often designated as μ, the Greek letter mu equivalent of the Latin letter "m", so on average arithmetic) data and standard deviation σ (the Greek letter sigma , the equivalent of the Latin letter "s" so the "standard") of them. We are referring to adjust the data to a Gaussian curve . One such adjustment is applied to situations where instead of having a dependent variable and whose values \u200b\u200bdepend on the values \u200b\u200bthat can make an independent variable X on which perhaps we can exercise some control data set in which what matters is the frequency with which data are collected are located within certain ranges. An example would be grades in certain subjects in a group number of 160 students whose scores show a distribution as follows:

The way in which data are presented, we must make a slight modification in our calculations to obtain the average arithmetic them, using as the representative value of each interval the average value between the minimum and maximum of each interval. Thus, the representative value of the interval between a score of 4.5 and 5.0 is 4.75, the representative value in the range between 5.0 and 5.5 is 5.25, and so on. Each of these values \u200b\u200brepresentative of each interval we have to give weight "fair" that belongs in the calculation of the mean multiplied by the frequency with which it occurs. Thus, the value 4.75 is multiplied by 4 since that is the frequency with which it occurs, and the value 5.25 is multiplied by 7 since that is the frequency with which it occurs, and so on. Thus, the arithmetic mean of the population of 150 students will:

Having obtained the average arithmetic X , the next step would be to obtain the dispersion of these data with respect to the arithmetic average, through a calculation and variance σ ² in which we average the sum of the squares of the differences d i of each data with respect to the arithmetic mean for the variance σ ² population data:

with which we can obtain the standard deviation σ population data (also known as the root mean square deviation-of-range data to the arithmetic mean) with the simple operation of taking the square root of variance: σ

worth noting that the standard deviation σ evaluated for a sample taken at random from a population has a slightly different definition of the standard deviation σ evaluated on all data the total of the population. The standard deviation σ sample of a population is obtained by replacing the term N in the denominator N-1, because the words of the "purists" value obtained is a better estimate of the standard deviation of the population from which the sample was taken. However, for sufficiently large sample (N greater than 30), there is little difference between the two estimates of σ . Anyway, when you want to get a "best estimate" can always be obtained by multiplying the standard deviation we have defined √ N/N-1 . It is important to bear in mind that σ is a somewhat arbitrary measure of data dispersion, is something that we have defined ourselves, and we use N-1 instead of N actually denominator is not an absolute. However, it is a universally accepted convention, perhaps among other things (besides the theoretical reasons cited by purists) and the fact that to calculate a dispersion of information is required at least two data, which is implicitly recognized by using N-1 in the denominator since this way is not possible to give N worth one without falling into a division by zero, the definition used N-1 removed from the scene any possible interpretation of σ with only one value. And another important reason has more to do with the reasons given by the purists is that the use of N-1 in the denominator has to do with something called the degrees of freedom in the analysis of variance ANOVA known as (Analysis of Variance ) used in the design of experiments (though that's get out a bit of subject we are discussing this document).

In descriptive statistics, which is carried out taking all values \u200b\u200bof a population of data and not taking a sample of that population, the most important of the graph of the relative frequencies of the data or histogram is the "area under the curve" rather than the formula of the curve to be pass by "height" of each data, the curve being used to find the mathematical probability of having a group of students from a range of scores, for example between 7.5 and 9.0, a mathematical probability value always lies between zero and unity. This is what is traditionally taught in textbooks.

However, before applying the statistical tables to carry out some probabilistic analysis of the area under the curve ", it is interesting how well the data fit a continuous curve can be drawn connecting the heights of the histogram. The formula that best describes a set of data which have been shown in the example is one that gives rise to the Gaussian curve . Then, to the following formula "Gaussian"

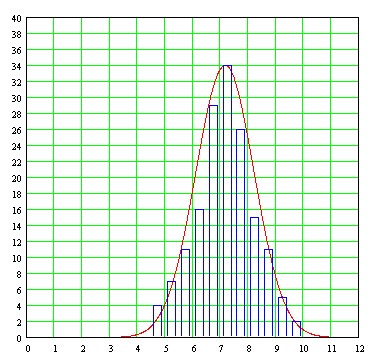

have the graph of the continuous curve drawn by this formula:

As can be seen, the curve indeed has the form of a bell, which derives a name with cuales es conocida.

Se puede demostrar, recurriendo a un criterio matemático conocido como el método de los mínimos cuadrados, que una fórmula general que modela una curva Gaussiana a un conjunto dado de datos con la apariencia de una "campana" es la siguiente:

Y resulta que µ es precisamente la media aritmética de la población de datos designada también como X , mientras que σ² es la varianza presentada por la población de datos. Esto signica que, para modelar una curva a un conjunto de datos como el que hemos estado manejando en el ejemplo, basta con calcular the mean and variance of the data, and put this information directly to the Gaussian formula, which will curve "best fit" (under the criterion of least squares) to the data. Parameter evaluation A no problem, since the curve must reach (but not exceeding) to a height of 34 (the number of students who represent the greater frequency with respect to other ranks of skills) so that the formula of the curve fitted to the data example is the following:

The graph of this Gaussian curve, superimposed on the graph bar that contains discrete data from which it was generated, is as follows:

We can see that the fit is reasonably good, considering the fact that in real life or experimental data never observed exactly fit the ideal Gaussian curve.

One thing which we must deal from the start, which almost never sufficiently clarified and explained well in the classroom is the fact that the general Gaussian formula not only allows positive values \u200b\u200bof X but also even allows values negative , which have no interpretation in the real world in cases like the one just see (on a grading system for students as we are assuming, any qualification can only vary from zero to ten minimum qualification as highest). In principle, X can range from X to X =- ∞ = + ∞. In many cases this is no problem, since the curve is rapidly approaching zero before X goes down to zero taking negative values, as in our example where the arithmetic is sufficiently far from X = 0 and dispersion of data is small enough to consider negative values \u200b\u200bof X as irrelevant, although the formula allows. But in cases in which the arithmetic mean X is too close to X = 0 and the data show a large dispersion, there is always the possibility that a complete end of the curve dropping the "other side" in the zone for which X takes negative values . If this happens, it could even force us to abandon the Gaussian model and other alternatives that will certainly be more unpleasant to handle from a mathematical point of view.

has provided a procedure to obtain the formula of a curve to connect the height of each bar of a histogram data showing the shape of the "bell", but it is important to clarify that individual points curve without real meaning, which is equivalent to say that a point as X = 7.8 for which the value of Y is equal to 28,595 is not something we should mean absolutely nothing, since it is the region under curve which makes sense, since the curve was generated from histogram bars that change from one interval to another. However, what we have done here is justified for comparative purposes because, before applying our notions of statistical data set using the Gaussian distribution about whether they want to make sure the data we analyze are shaped like a bell, because if data seem to follow a linear trend ever upward or if instead of a bell we have two bells (the latter occurs when data is accumulated from two different sources), would be wrong to try to force such data on a Gaussian distribution. It is important to add that we have seen the curve is not symmetrical distribution studied in statistical texts, as so we must normalize formula so that not only the arithmetic mean is shifted to the left the diagram to have a zero value to both sides being symmetrical with respect to X = o, but also the area under the curve has the value unity, this in order to give a performance curve probability as applied to non-descriptive statistics but inferential statistics in which a random sample trying to figure out the behavior of the data from a general population. In this normalization process derives its name from the curve as normal curve.

Before trying to invest time and effort to set a formula empirical data before doing any arithmetic, it is important to an early graphical data, as this is the first thing that must guide us in selecting the mathematical model to be used for modeling. In the case of distributions frequency as we've seen, which are represented by histograms, if doing a graph of the data we get something like the following:

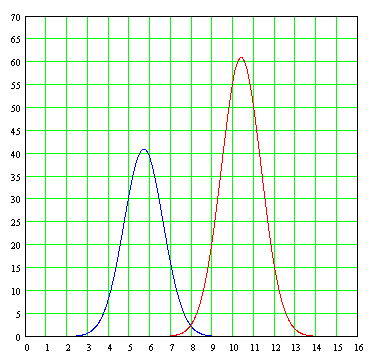

then if we can "force" the data to fall within a formula modeled on a continuous independent variable whose stroke is "fit" to the heights of the bars of the histograms, obtaining in this last example a setting like this (this adjustment is carried out simply by adding the expressions for two Gaussian curves with means other than by changing individual variances and amplitudes of each curve to the score):

this setting is a setting meaningless, since a chart like this, known as graphical bimodal, which has two "caps" peaks, is telling us that instead of having a population of data the same source we have is two populations of different origins data, data that came tucked into a single "package" at the hands of the analyst. This is when the analyst is almost forced to go to the "field" to see how and where data were collected. It is possible that the data represent the lengths of certain beams which were produced by two different machines. It is also possible These data have originated in an experiment in which he was testing the effect of a new type of fertilizer on yield of some crops and the fertilizer was being supplied to experimental plots for two different people in two different places, in which case there something that is causing a significant difference in the performance of the fertilizer and the effect it may have the fertilizer itself, either that both persons have been supplying different quantities of the same fertilizer, or the characteristics of different areas have caused Gaussian distribution altered the performance of each type of fertilizer.

For the continuous curve "double hump" shown above, this curve was drawn by the following formula score obtained by adding two Gaussian curves and setting the "top" of each curve to match the approximate-manipulating the arithmetic mean μ at each end-caps with each of the two top bars, modifying also the variance σ in each term for "open" or "close" the width of each fitted curve will:

Then we separated individual plots (not added) to each of the Gaussian curves shown in the formula, showing a probable range of data from two distinct populations of which came from the scrambled data.

In this example, it was easy to just view the histogram, the bar graph data-the presence of two Gaussian curves instead of one, thanks to the arithmetic mean of each curve (5.7 and 10.4) are separated by a margin of almost two to one. But we will not always so lucky, and there will be cases in which the arithmetic will be so close to each other that will be somewhat difficult for the analyst to decide if it considers all data as one or try to find two different curves, as would occur with a graph whose curve joining the heights of the bars would look like this:

is in cases like these in which the analyst must draw on all her wit and all his experience to decide if he tries to find two discernible groups of data in the data set at hand, or if it is not worth for the presence of two distinct populations riots in one, opting to perform modeling based on a single Gaussian formula.

Discovering the influence of unknown factors that may affect the performance of something as a fertilizer is just one of the primary objectives the design of experiments . In the design of experiments are not interested in carrying out a modeling of the data to a formula, that comes after it has been established unequivocally how many and what are the factors that can affect performance or response to something. Once passed this stage, we can collect data to carry out the adjustment of data to a formula. In the case of a bimodal distribution, instead of trying to set a formula to describe all data with a single distribution as we have seen, is much better to try to separate the data from the two different populations that are causing the "double hump camel ", a fact that can analyze two data sets separately with the assurance that for each data set we obtain a Gaussian distribution with a single hump. It can be seen from this that the formulas data modeling is a continuous cycle experimentation, analysis and interpretation of results, followed by a new cycle of experimentation and analysis and interpretation of new results to be improving a process or could go better describing the data being collected in a laboratory or field. The data modeling formula goes hand in hand with the procedures for the collection of the same.

PROBLEM : experimentally in the laboratory is the boiling point for some organic compounds known as alkanes (chemical formula C n H 2n +2 \u200b\u200b ) has the following values \u200b\u200bin degrees Celsius:

Methane (1 carbon atom): -161.7

ethane (2 carbons): -88.6

propane (3 carbon atoms): -42.1

butane (4 carbons): -0.5

pentane (5 carbon atoms): 36.1

hexane (6 carbon atoms): 68.7

heptane (7 carbons): 98.4

octane (8 carbon atoms): 125.7

Nonane (9 carbon atoms): 150.8

Dean (10 carbon atoms): 174.0

Make a graph of the data. Does it show any tendency the boiling point of these organic compounds according to the number of carbon atoms that have each compound?

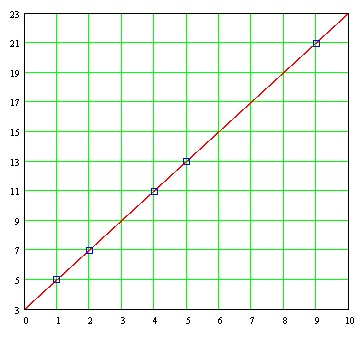

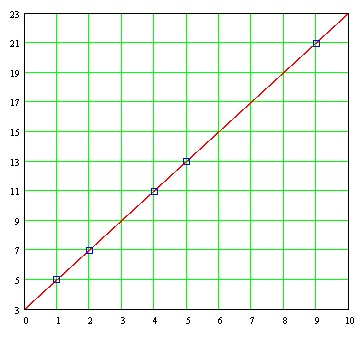

The graph of discrete data is as follows:

The graph we can see that the data seem to accommodate a super smooth continuous curve, following the cause-effect, which we suggests that behind this data is a natural law waiting to be discovered by us. Since the data do not follow a straight line, the relationship between them is not a linear relationship, is a non-linear relationship , and do not expect that the mathematical formula that is behind this curve is that of a line straight. In the absence of a theoretical model that allows us to have the exact formula, the graph arising from this data set is an excellent example of the places where we can try to adjust the data to an empirical formula, the better the data fit , the better we will suggest the nature of natural laws operating behind this phenomenon.

Although at first glance one thinks of the possibility of increasing all bodies used in the experiment to make the effect more intense attraction between them, no such thing can lead to out moving masses (red) without bursting the thin thread which hangs these weights. You can, however, increase the mass of blue, and this was precisely what he did Cavendish. Note that there are only two masses being attracted but two pairs allying mass, which increases the effect on the torsion balance. Either way, the enormous difficulties in obtaining a numerical value G reliable under this experiment will not increase our confidence in the value of G thus obtained.

However, a value of G obtained under these conditions and put in the formula does not guarantee that the formula will work as predicted by Newton for other conditions that are very different from the conditions used in the laboratory, which involved masses much greater distances. This formula is not the only one who can give us an attractive force between two masses decreases with increasing distance between the geometric centers of the masses. We can formulate a law that says: "Two bodies attract because direct product of their masses and inversely as the distance that separates them. "Note the absence of the term" square of the distance separating them. And we can make both formulas coincide numerically for some distance apart, but the variation in the strength of attraction given by the two formulas, it will become more and more evident as the masses are being brought closer or separated more and more. As both formulas, mathematically different, are not equally valid to describe the same phenomenon, one has to be discarded with the help of experimentally obtained data . Again we have to go to the lab. The confirmation of Newton's law is valid we get if we can measure the force of attraction for various distances by a graph of the results. For a variation inversely proportional to the square of the distance, the graph should be as follows:

one way or another, are laboratory experiments that help us to confirm or rule out any theory like this. And in experiments difficult by their very nature, which introduces a statistical random error that we can introduce experimental variation in each reading we take, we are almost obliged to collect the maximum amount of data to increase the reliability of the results, in which case the problem will be trying to draw any conclusion from the mass of data collected, because you can not expect all or perhaps none of the data will "fall" smoothly and accurately on a continuous curve. This forces us to try to find somehow the mathematical expression for a smooth, continuous curve, among many other possible ones, that best fits the experimental data.

case we have spoken this is the typical case in that before carrying out measurements in the laboratory and there is a theoretical model -a formula - waiting to be confirmed experimentally by measurements or observations made after the formula was obtained. But many other cases in which although experimental data, despite the unavoidable sources of variation and measurement errors seem to follow any law that can be adjusted to a theoretical model, such a formula does not exist, either because they have not found or perhaps because it is too complex to be stated in a few lines. In such cases the best we can do is carry out the fit of the data obtained experimentally to a empirical formula, a formula selected from many to be the one that best fits the data. The most notorious example of this today has to do with the alleged global warming on Earth, confirmed independently by several experimental data collected over several decades in many places around the Earth. Still no exact formula or even an empirical formula that allows us to predict the Earth's temperatures will be in later years if things continue as usual. All we have are graphs in which, broadly speaking, one can see a tendency towards a gradual increase in temperature, inferred from the data trend ( trend data), and even some of these data are cause controversial, such as temperature data registered in Punta Arenas, Chile, between 1888 and 2001:

According to the graph of this data which is set in a straight line of red that, mathematically speaking, represents the trend Data averaged over time, the temperature in that part of the world has been rising for over a century, but instead has been declining by a cumulative average of 0.6 degrees Celsius, contrary to the measurements have been carried out in other parts of the world. We do not know exactly why that happens there is different from that observed in other parts of world. Possibly there are interactions with sea temperatures, with the climatic conditions in that region of the planet, or even up to the rotation of the Earth, which are influenced to cause a fall rather than a rise in temperature observed at Punta Arenas. Anyway, despite ups and downs of the data, with such data is possible to obtain the straight line of red-superimposed on the data, which mathematically speaking is "fit" better than other lines to the average mobile data accumulated . Trends continue, this straight line allows us to estimate, between high and low that they occur in the data in subsequent years, average temperatures will short term in Punta Arenas in the years ahead. On these data, the line of best fit ( best fit) is a completely empirical formula for which there is as yet no theoretical model to support it. And just as there are many in this formula which is a mathematical model to simplify something that is being observed or measured.

Often, when carrying out the plotting of the data (the most important step prior to the selection of the mathematical model which will try to "adjust" the data) since before attempting to carry out the adjustment data to a formula we can detect the presence of an abnormality in the same due to an unexpected source of error that has nothing to do with the error of a statistical nature, as shown in the following graph of the temperatures of lakes in Detroit:

Note

carefully on this chart that there are two points that were not linked with lines for researchers to highlight the presence of a serious anomaly in the data. These are the points representing the end of 1999 and early 2000. As we begin 2000, the data show a "jump" disproportionate to the data prior history. Although we try to force all data are grouped under a certain trend predicted by an empirical formula, an anomaly as seen in this graph we practically cries out for explanation before being buried in such empirical formula. A review of the data revealed that, indeed, the "jump" disproportionate had to do with a phenomenon that was already expected at that time was going to happen with some computer systems are not prepared for the consequences of the change of digits in the date 1999 to 2000, then dubbed as the Y2K phenomenon (an acronym for the phrase " Y ear 2000" where k symbolizes thousand). The discovery of this effect gave rise to an exchange of explanations documented in places like the following:

http://www.climateaudit.org/?p=1854

http://www.climateaudit.org/?p=1868

This exchange for clarification led to the same U.S. space agency NASA to correct their own data, taking into account the effect Y2k, and corrected data displayed on the following site:

http://data.giss.nasa.gov/gistemp/graphs/

Fig.D.txt

The examples we have seen have been instances in which the experimental data despite variations in the same setting allows them to approximate a mathematical formula or even allow the detection of some error in gathering them. But there are many other occasions in which to carry out the data to plot the presence of a trend is not at all obvious, as shown in the following sample data collected on the frequency of sunspots (which may have some effect on global warming of the Earth):

In the graph of the data has been superimposed a red line under a statistical mathematical criterion represents the line of best fit to the data. But in this case this line is clearly an obvious decline, including online almost seems to be a horizontal line. If you delete line, the data seem so scattered that have chosen a straight line to try to "bundle" the trend of data seems rather an act of faith than scientific objectivity. It may be no reason to expect a statistically significant change on the frequency of sunspots over the course of several centuries or even several thousand years, given the enormous complexity of the nuclear processes that keep the Sun in constant activity. This last example demonstrates the enormous difficulties that face any researcher trying to analyze a set of experimental data on which there is any theoretical model.

The data set formulas is of vital importance always bear in mind the law of cause and effect . In the case of the law of universal gravitation, as set forth by an exact formula, assuming the masses of two bodies as unchanged, then a variation of the distance between the masses will have a direct effect on the strength of gravitational attraction is between them. A series of experimental data on a chart positions will confirm this. And even in cases where there is an exact model, we can (or rather, we need to) make a cause-effect relationship for a model of two variables can have some meaning. Such is the case when performing measurements of stature between students of different grades of elementary school. In this case, the average heights for each grade students will be different, it will increase as increasing the grade, for the simple fact that students at this age are growing in stature every year. In short, the higher the grade, the higher the average height of students who expect to find in a group. This is a cause-effect relationship. In contrast, if we find a direct relationship between the temperature of a city for one day of the year and the number of pets that people have in their homes, most likely will not find any relationship and come out empty handed because there is no reason to expect that the average number of pets per household (case) may have some influence on the temperature (effect), and if so, this effect would be negligible by mathematically smallness.

we have seen cases involve situations based on natural phenomena which we can carry out measurements in the laboratory or outside the laboratory using something as simple as a thermometer or as an amateur telescope. But there are many other cases in which it is not necessary to conduct measurements, because more than getting data in the lab what is needed is a mathematical model that allows us to make a projection or prediction with data already on hand, as data from a census or a survey. An example is the expected growth of Mexico's annual population. The national population census is to be in charge of obtaining figures on the population of Mexico, so that to try to make a prediction on the expected population growth in future years all we have to do is go to National Institute of Statistics, Geography and Informatics (INEGI) for the results of previous censuses. It is assumed that in these census figures are not exact, there is no reason to expect such a thing, given the enormous number of variables that have to deal with workers who must carry out the census and the changing day to day can affect the "reality" of the census. Even assuming that the census could be carried out exactly, we would be another problem. If we plot the population ratios of 5 years (for example), there would be no problem in making future predictions based on past data if the data when plotted fall all in a "straight line". The problem is that, when plotted almost never fall on a straight line, usually grouped around what appears to be a curve . Here we try to "adjust" the data to a Various formulas and use the one that best approximates all the data you already have, for which we need a mathematical-statistical approach that is less subjective as possible. It is precisely for this so we require the principles to be discussed here.

The data adjustment formulas, there are cases in which it is not necessary to go into detailed mathematical calculations for the simple reason that for such cases have been obtained and formulas that only require the calculation of simple things such as the arithmetic mean (often designated as μ, the Greek letter mu equivalent of the Latin letter "m", so on average arithmetic) data and standard deviation σ (the Greek letter sigma , the equivalent of the Latin letter "s" so the "standard") of them. We are referring to adjust the data to a Gaussian curve . One such adjustment is applied to situations where instead of having a dependent variable and whose values \u200b\u200bdepend on the values \u200b\u200bthat can make an independent variable X on which perhaps we can exercise some control data set in which what matters is the frequency with which data are collected are located within certain ranges. An example would be grades in certain subjects in a group number of 160 students whose scores show a distribution as follows:

Between 4.5 and 5.0: 4 studentsThis type of distribution, when plotted, statistically show a tendency to reach a peak in a curve that resembles a bell. The first calculation that we made on such data is the arithmetic average or arithmetic mean defined as

Between 5.0 and 5.5: 7 students

Between 5.5 and 6.0: 11 students

Between 6.0 and 6.5: 16 students

Between 6.5 and 7.0: 29 students

Between 7.0 and 7.5: 34 students

Between 7.5 and 8.0: 26 students

Between 8.0 and 8.5: 15 students

Between 8.5 and 9.0: 11 students

Between 9.0 and 9.5: 5 students

Between 9.5 and 10.0: 2 students

The way in which data are presented, we must make a slight modification in our calculations to obtain the average arithmetic them, using as the representative value of each interval the average value between the minimum and maximum of each interval. Thus, the representative value of the interval between a score of 4.5 and 5.0 is 4.75, the representative value in the range between 5.0 and 5.5 is 5.25, and so on. Each of these values \u200b\u200brepresentative of each interval we have to give weight "fair" that belongs in the calculation of the mean multiplied by the frequency with which it occurs. Thus, the value 4.75 is multiplied by 4 since that is the frequency with which it occurs, and the value 5.25 is multiplied by 7 since that is the frequency with which it occurs, and so on. Thus, the arithmetic mean of the population of 150 students will:

X = [(4) (4.75) + (7) (5.25) + (11) (5.75) +. .. (9.75) (2)] / 160

X = 7,178

X = 7,178

Having obtained the average arithmetic X , the next step would be to obtain the dispersion of these data with respect to the arithmetic average, through a calculation and variance σ ² in which we average the sum of the squares of the differences d i of each data with respect to the arithmetic mean for the variance σ ² population data:

Σd ² = 4 ∙ (4.75-7.178) ² + 7 (5.25-7.178) ² + ... + 2 ∙ (2-7178) ²

178,923

Σd ² = σ ² =

Σd ² / N = 1,118

178,923

Σd ² = σ ² =

Σd ² / N = 1,118

with which we can obtain the standard deviation σ population data (also known as the root mean square deviation-of-range data to the arithmetic mean) with the simple operation of taking the square root of variance: σ

= √ 1118 = 1057

worth noting that the standard deviation σ evaluated for a sample taken at random from a population has a slightly different definition of the standard deviation σ evaluated on all data the total of the population. The standard deviation σ sample of a population is obtained by replacing the term N in the denominator N-1, because the words of the "purists" value obtained is a better estimate of the standard deviation of the population from which the sample was taken. However, for sufficiently large sample (N greater than 30), there is little difference between the two estimates of σ . Anyway, when you want to get a "best estimate" can always be obtained by multiplying the standard deviation we have defined √ N/N-1 . It is important to bear in mind that σ is a somewhat arbitrary measure of data dispersion, is something that we have defined ourselves, and we use N-1 instead of N actually denominator is not an absolute. However, it is a universally accepted convention, perhaps among other things (besides the theoretical reasons cited by purists) and the fact that to calculate a dispersion of information is required at least two data, which is implicitly recognized by using N-1 in the denominator since this way is not possible to give N worth one without falling into a division by zero, the definition used N-1 removed from the scene any possible interpretation of σ with only one value. And another important reason has more to do with the reasons given by the purists is that the use of N-1 in the denominator has to do with something called the degrees of freedom in the analysis of variance ANOVA known as (Analysis of Variance ) used in the design of experiments (though that's get out a bit of subject we are discussing this document).

In descriptive statistics, which is carried out taking all values \u200b\u200bof a population of data and not taking a sample of that population, the most important of the graph of the relative frequencies of the data or histogram is the "area under the curve" rather than the formula of the curve to be pass by "height" of each data, the curve being used to find the mathematical probability of having a group of students from a range of scores, for example between 7.5 and 9.0, a mathematical probability value always lies between zero and unity. This is what is traditionally taught in textbooks.

However, before applying the statistical tables to carry out some probabilistic analysis of the area under the curve ", it is interesting how well the data fit a continuous curve can be drawn connecting the heights of the histogram. The formula that best describes a set of data which have been shown in the example is one that gives rise to the Gaussian curve . Then, to the following formula "Gaussian"

have the graph of the continuous curve drawn by this formula:

As can be seen, the curve indeed has the form of a bell, which derives a name with cuales es conocida.

Se puede demostrar, recurriendo a un criterio matemático conocido como el método de los mínimos cuadrados, que una fórmula general que modela una curva Gaussiana a un conjunto dado de datos con la apariencia de una "campana" es la siguiente:

Y resulta que µ es precisamente la media aritmética de la población de datos designada también como X , mientras que σ² es la varianza presentada por la población de datos. Esto signica que, para modelar una curva a un conjunto de datos como el que hemos estado manejando en el ejemplo, basta con calcular the mean and variance of the data, and put this information directly to the Gaussian formula, which will curve "best fit" (under the criterion of least squares) to the data. Parameter evaluation A no problem, since the curve must reach (but not exceeding) to a height of 34 (the number of students who represent the greater frequency with respect to other ranks of skills) so that the formula of the curve fitted to the data example is the following:

The graph of this Gaussian curve, superimposed on the graph bar that contains discrete data from which it was generated, is as follows:

We can see that the fit is reasonably good, considering the fact that in real life or experimental data never observed exactly fit the ideal Gaussian curve.

One thing which we must deal from the start, which almost never sufficiently clarified and explained well in the classroom is the fact that the general Gaussian formula not only allows positive values \u200b\u200bof X but also even allows values negative , which have no interpretation in the real world in cases like the one just see (on a grading system for students as we are assuming, any qualification can only vary from zero to ten minimum qualification as highest). In principle, X can range from X to X =- ∞ = + ∞. In many cases this is no problem, since the curve is rapidly approaching zero before X goes down to zero taking negative values, as in our example where the arithmetic is sufficiently far from X = 0 and dispersion of data is small enough to consider negative values \u200b\u200bof X as irrelevant, although the formula allows. But in cases in which the arithmetic mean X is too close to X = 0 and the data show a large dispersion, there is always the possibility that a complete end of the curve dropping the "other side" in the zone for which X takes negative values . If this happens, it could even force us to abandon the Gaussian model and other alternatives that will certainly be more unpleasant to handle from a mathematical point of view.

has provided a procedure to obtain the formula of a curve to connect the height of each bar of a histogram data showing the shape of the "bell", but it is important to clarify that individual points curve without real meaning, which is equivalent to say that a point as X = 7.8 for which the value of Y is equal to 28,595 is not something we should mean absolutely nothing, since it is the region under curve which makes sense, since the curve was generated from histogram bars that change from one interval to another. However, what we have done here is justified for comparative purposes because, before applying our notions of statistical data set using the Gaussian distribution about whether they want to make sure the data we analyze are shaped like a bell, because if data seem to follow a linear trend ever upward or if instead of a bell we have two bells (the latter occurs when data is accumulated from two different sources), would be wrong to try to force such data on a Gaussian distribution. It is important to add that we have seen the curve is not symmetrical distribution studied in statistical texts, as so we must normalize formula so that not only the arithmetic mean is shifted to the left the diagram to have a zero value to both sides being symmetrical with respect to X = o, but also the area under the curve has the value unity, this in order to give a performance curve probability as applied to non-descriptive statistics but inferential statistics in which a random sample trying to figure out the behavior of the data from a general population. In this normalization process derives its name from the curve as normal curve.

Before trying to invest time and effort to set a formula empirical data before doing any arithmetic, it is important to an early graphical data, as this is the first thing that must guide us in selecting the mathematical model to be used for modeling. In the case of distributions frequency as we've seen, which are represented by histograms, if doing a graph of the data we get something like the following:

then if we can "force" the data to fall within a formula modeled on a continuous independent variable whose stroke is "fit" to the heights of the bars of the histograms, obtaining in this last example a setting like this (this adjustment is carried out simply by adding the expressions for two Gaussian curves with means other than by changing individual variances and amplitudes of each curve to the score):

this setting is a setting meaningless, since a chart like this, known as graphical bimodal, which has two "caps" peaks, is telling us that instead of having a population of data the same source we have is two populations of different origins data, data that came tucked into a single "package" at the hands of the analyst. This is when the analyst is almost forced to go to the "field" to see how and where data were collected. It is possible that the data represent the lengths of certain beams which were produced by two different machines. It is also possible These data have originated in an experiment in which he was testing the effect of a new type of fertilizer on yield of some crops and the fertilizer was being supplied to experimental plots for two different people in two different places, in which case there something that is causing a significant difference in the performance of the fertilizer and the effect it may have the fertilizer itself, either that both persons have been supplying different quantities of the same fertilizer, or the characteristics of different areas have caused Gaussian distribution altered the performance of each type of fertilizer.

For the continuous curve "double hump" shown above, this curve was drawn by the following formula score obtained by adding two Gaussian curves and setting the "top" of each curve to match the approximate-manipulating the arithmetic mean μ at each end-caps with each of the two top bars, modifying also the variance σ in each term for "open" or "close" the width of each fitted curve will:

Then we separated individual plots (not added) to each of the Gaussian curves shown in the formula, showing a probable range of data from two distinct populations of which came from the scrambled data.

In this example, it was easy to just view the histogram, the bar graph data-the presence of two Gaussian curves instead of one, thanks to the arithmetic mean of each curve (5.7 and 10.4) are separated by a margin of almost two to one. But we will not always so lucky, and there will be cases in which the arithmetic will be so close to each other that will be somewhat difficult for the analyst to decide if it considers all data as one or try to find two different curves, as would occur with a graph whose curve joining the heights of the bars would look like this:

is in cases like these in which the analyst must draw on all her wit and all his experience to decide if he tries to find two discernible groups of data in the data set at hand, or if it is not worth for the presence of two distinct populations riots in one, opting to perform modeling based on a single Gaussian formula.

Discovering the influence of unknown factors that may affect the performance of something as a fertilizer is just one of the primary objectives the design of experiments . In the design of experiments are not interested in carrying out a modeling of the data to a formula, that comes after it has been established unequivocally how many and what are the factors that can affect performance or response to something. Once passed this stage, we can collect data to carry out the adjustment of data to a formula. In the case of a bimodal distribution, instead of trying to set a formula to describe all data with a single distribution as we have seen, is much better to try to separate the data from the two different populations that are causing the "double hump camel ", a fact that can analyze two data sets separately with the assurance that for each data set we obtain a Gaussian distribution with a single hump. It can be seen from this that the formulas data modeling is a continuous cycle experimentation, analysis and interpretation of results, followed by a new cycle of experimentation and analysis and interpretation of new results to be improving a process or could go better describing the data being collected in a laboratory or field. The data modeling formula goes hand in hand with the procedures for the collection of the same.

PROBLEM : experimentally in the laboratory is the boiling point for some organic compounds known as alkanes (chemical formula C n H 2n +2 \u200b\u200b ) has the following values \u200b\u200bin degrees Celsius:

Methane (1 carbon atom): -161.7

ethane (2 carbons): -88.6

propane (3 carbon atoms): -42.1

butane (4 carbons): -0.5

pentane (5 carbon atoms): 36.1

hexane (6 carbon atoms): 68.7

heptane (7 carbons): 98.4

octane (8 carbon atoms): 125.7

Nonane (9 carbon atoms): 150.8

Dean (10 carbon atoms): 174.0

Make a graph of the data. Does it show any tendency the boiling point of these organic compounds according to the number of carbon atoms that have each compound?

The graph of discrete data is as follows:

The graph we can see that the data seem to accommodate a super smooth continuous curve, following the cause-effect, which we suggests that behind this data is a natural law waiting to be discovered by us. Since the data do not follow a straight line, the relationship between them is not a linear relationship, is a non-linear relationship , and do not expect that the mathematical formula that is behind this curve is that of a line straight. In the absence of a theoretical model that allows us to have the exact formula, the graph arising from this data set is an excellent example of the places where we can try to adjust the data to an empirical formula, the better the data fit , the better we will suggest the nature of natural laws operating behind this phenomenon.

PROBLEM: Given the following distribution of the diameters of the heads of rivets (expressed in inches) made by some company and the frequency f with which they occur:

representative a total of 250 measurements, adjusting a Gaussian curve to these data. Also, make the outline of a bar graph of the data superimposing Gaussian curve on the same graph.

For Gaussian curve, the first step is to obtain the arithmetic mean of the data:

By the way which are presented the data, we have to make a slight modification in our calculations to obtain the arithmetic mean of the same, using as the representative value of each interval the average value between the minimum and maximum of each interval. Thus, the representative value in the range between .7247 and .7249 is .7248, the representative value in the range between .7250 and .7252 is .7251, and so on. Each of these values \u200b\u200brepresenting each interval weight we must give "fair" that belongs in the calculation of the mean multiplied by the frequency with which it occurs. Thus, the value .7248 will be multiplied by 2 since this is the frequency con la cual ocurre, y el valor .7251 será multiplicado por 6 puesto que esa es la frecuencia con la cual ocurre, y así sucesivamente. De este modo, la media aritmética de la población de 250 datos será:

Tras esto obtenemos la desviación estándard σ calculando primero la varianza σ 2, also using in our calculations here the representative values \u200b\u200bof each interval and the frequency with which each such case values:

With this we all we need to produce the Gaussian curve fitted to the data. The height of the curve is selected to coincide with the bar (representing Data Range), which also has the highest, which is still the diameter range between .7262 and .7264 inches with a "height" of 68 units. Thus, the graph, using a "high" for the Gaussian curve of 68 units is as follows:

The Gaussian curve fit to the data does not seem as "ideal" as we wanted. This is about something more fundamental than the fact that the arithmetic mean X of data (72 642 inches) is not identical to the representative point of the range of values \u200b\u200b(7263) in which it occurs as often of the 68 observations ( and emphasized here as being of greater importance than in real life is very rare time in which the maximum of the calculated curve coincides with the arithmetic value is more likely to be the arithmetic average ) , let alone the fact that the bar chart has been drawn without each bar extends to touch with its neighboring bars. If you look around the distribution of the data, we see that the distribution data are more loaded bar to the right than left. Ideal Gaussian curve we have been driving is a perfectly symmetrical curve, with the same amount of data or observations distributed to the right of the vertical axis of symmetry to the left. This asymmetry is known as bias ( skew) or lean precisely because the original data is loaded more than one side than the other, that is precisely what makes the "top" of the distribution of rods in graph does not coincide with the arithmetic mean of the data. And although there is a theorem in statistics called the Central Limit Theorem (Central Limit Theorem ) tells us that the sum of a large number of independent random variables will be normally distributed (Gaussian) with increasing amount data or observations, taking more and more readings will not necessarily make the data are adjusted to become more symmetrical curve, this will not happen if there are substantive reasons why more data is loaded to one side than the other. This is a situation that the ideal Gaussian curve is not prepared to handle, and if we want to accurately adjust a curve to data in which we would expect an ideal Gaussian behavior then we need to modify the Gaussian curve becoming more complex formula, using a the multiply trick as the amplitude of the curve by some factor that makes their decline is not as "soft" either right or left. Unfortunately, the use of such tricks are often no theoretical justifications to explain the amendment to the modeled curve, are simply a resource for fine adjustment. This is when the experimenter or data analyst has to decide if the goal is really trying to justify the resort to such tricks, but have their way, do not help improve our understanding of what is happening behind a accumulation of data. There

representative a total of 250 measurements, adjusting a Gaussian curve to these data. Also, make the outline of a bar graph of the data superimposing Gaussian curve on the same graph.

For Gaussian curve, the first step is to obtain the arithmetic mean of the data:

By the way which are presented the data, we have to make a slight modification in our calculations to obtain the arithmetic mean of the same, using as the representative value of each interval the average value between the minimum and maximum of each interval. Thus, the representative value in the range between .7247 and .7249 is .7248, the representative value in the range between .7250 and .7252 is .7251, and so on. Each of these values \u200b\u200brepresenting each interval weight we must give "fair" that belongs in the calculation of the mean multiplied by the frequency with which it occurs. Thus, the value .7248 will be multiplied by 2 since this is the frequency con la cual ocurre, y el valor .7251 será multiplicado por 6 puesto que esa es la frecuencia con la cual ocurre, y así sucesivamente. De este modo, la media aritmética de la población de 250 datos será:

X = [2∙(.7248) + 6∙(.7251) + 8∙(.7254) + ... + 4∙(.7278) + 1∙(.7281)]/250

X = 181.604/250

X = .72642 pulgadas

X = 181.604/250

X = .72642 pulgadas

Tras esto obtenemos la desviación estándard σ calculando primero la varianza σ 2, also using in our calculations here the representative values \u200b\u200bof each interval and the frequency with which each such case values:

Σd ∙ ² = 2 (-. 7248 72 646) ² + 6 (7251 -. 72 642) ² + ... + 1 ∙ (.7281 -. 72 642) ²

Σd ² = 0.000082926

σ = Σd ² ² / N = 0.00008292/250 = 0.000000331704

σ = .00057594 inches

Σd ² = 0.000082926

σ = Σd ² ² / N = 0.00008292/250 = 0.000000331704

σ = .00057594 inches

With this we all we need to produce the Gaussian curve fitted to the data. The height of the curve is selected to coincide with the bar (representing Data Range), which also has the highest, which is still the diameter range between .7262 and .7264 inches with a "height" of 68 units. Thus, the graph, using a "high" for the Gaussian curve of 68 units is as follows: